To keep pace with ever-present business and technology change and challenges, organizations need operating models built with a strong data and analytics foundation. Here’s how your organization can build one incorporating a range of key components and best practices to quickly realize your business objectives.

Executive Summary

To succeed in today’s hypercompetitive global economy, organizations must embrace insight-driven decision-making. This enables them to quickly anticipate and enforce business change with constant and effective innovation that swiftly incorporates technological advances where appropriate. The pivot to digital, new consumer-minded regulations around data privacy and the compelling need for greater levels of data quality are together forcing organizations to enact better controls over how data is created, transformed, stored and consumed across the extended enterprise. Chief data/analytics officers who are directly responsible for the sanctity and security of enterprise data are struggling to bridge the gap between their data strategies, day-to-day operations and core processes. This is where an operating model can help. It provides a common view and definition of how an organization should operate to convert its business strategy to operational design. While some mature organizations in heavily regulated sectors (e.g., financial services) and fast-paced sectors (e.g., retail) are tweaking their existing operating models, younger organizations are creating operating models with data and analytics as the backbone to meet their business objectives. This white paper provides a framework along with a set of must-have components for building a data and analytics operating model (or customizing an existing model).

The starting point: Methodology

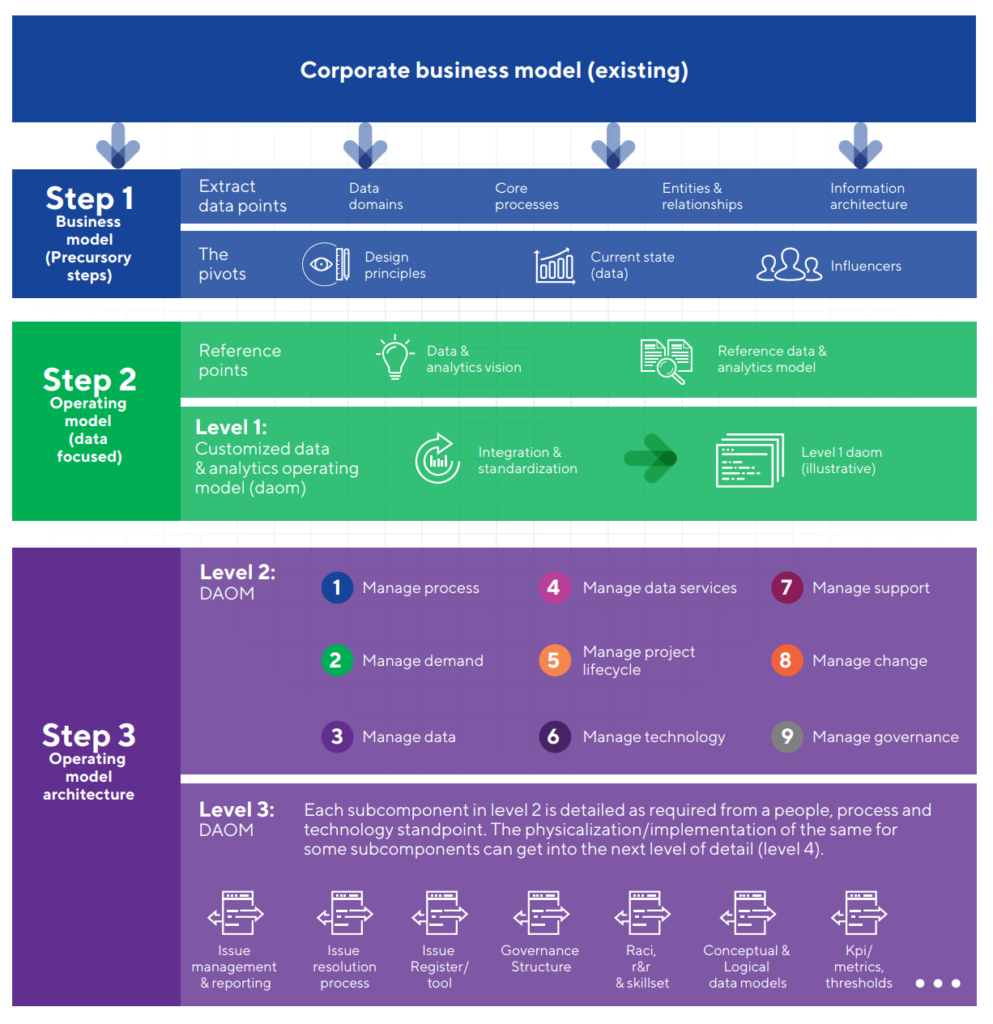

Each organization is unique, with its own specific data and analytics needs. Different sets of capabilities are often required to fill these needs. For this reason, creating an operating model blueprint is an art, and is no trivial matter. The following systematic approach to building it will ensure the final product works optimally for your organization. Building the operating model is a three-step process starting with the business model (focus on data) followed by operating model design and then architecture. However, there is a precursory step, called “the pivots,” to capture the current state and extract data points from the business model prior to designing the data and analytics operating model. Understanding key elements that can influence the overall operating model is therefore an important consideration from the get-go (as Figure 1 illustrates). The operating model design focuses on integration and standardization, while the operating model architecture provides a detailed but still abstract view of organizing logic for business, data and technology. In simple terms, this pertains to the crystallization of the design approach for various components, including the interaction model and process optimization.

Preliminary step: The pivots

No two organizations are identical, and the operating model can differ based on a number of parameters — or pivots — that influence the operating model design. These parameters fall into three broad buckets:

Design principles: These set the foundation for target state definition, operation and implementation. Creating a data vision statement, therefore, will have a direct impact on the model’s design principles. Keep in mind, effective design principles will leverage all existing organizational capabilities and resources to the extent possible. In addition, they will be reusable despite disruptive technologies and industrial advancements. So these principles should not contain any generic statements, like “enable better visualization,” that are difficult to measure or so particular to your organization that operating-model evaluation is contingent upon them. The principles can address areas such as efficiency, cost, satisfaction, governance, technology, performance metrics, etc.

Figure 1: Sequence of operating model development

Current state: Gauging the maturity of data and related components — which is vital to designing the right model — demands a two-pronged approach: top down and bottom up. The reason? Findings will reveal key levers that require attention and a round of prioritization, which in turn can move decision-makers to see if intermediate operating models (IOMs) are required.

Influencers: Influencers fall into three broad categories: internal, external and support. Current-state assessment captures these details, requiring team leaders to be cognizant of these parameters prior to the operating-model design (see Figure 2). The “internal” category captures detail at the organization level. “External” highlights the organization’s focus and factors that can affect the organization. And “support factor” provides insights into how much complexity and effort will be required by the transformation exercise.

First step: Business model

A business model describes how an enterprise leverages its products/services to deliver value, as well as generate revenue and profit. Unlike a corporate business model, however, the objective here is to identify all core processes that generate data. In addition, the business model needs to capture all details from a data lens: anything that generates or touches data across the entire data value chain (see Figure 3). We recommend that organizations leverage one or more of the popular strategy frameworks, such as the Business Model Canvas¹ or the Operating Model Canvas,² to convert the information gathered as part of the pivots into a business model. Other frameworks that add value are Porter’s Value Chain³ and McKinsey’s 7S framework.⁴ The output of this step is not a literal model but a collection of data points from the corporate business model and current state required to build the operating model.

Second step: Operating model

The operating model is an extension of the business model. It addresses how people, process and technology elements are integrated and standardized.

Integration: This is the most difficult part, as it connects various business units, including third parties. The integration of data is primarily at the process level (both between and across processes) to enable end-to-end transaction processing and a 360-degree view of the customer. The objective is to identify the core processes and determine the level/type of integration required for end-to-end functioning to enable increased efficiency, coordination, transparency and agility (see Figure 4). A good starting point is to create a cross-functional process map, enterprise bus matrix, activity-based map or competency map to understand the complexity of core processes and data. In our experience, tight integration between processes and functions can enable functionalities such as self-service, process automation and data consolidation.
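
As a rough illustration of the enterprise bus matrix mentioned above, the following Python sketch maps a few core processes to the data entities they touch and lists the entities shared by more than one process. The process and entity names are hypothetical, chosen only for illustration; a real matrix would come out of the business model step.

```python
from collections import defaultdict

# Hypothetical core processes and the data entities each one touches.
BUS_MATRIX = {
    "order_to_cash": {"customer", "product", "date"},
    "procure_to_pay": {"supplier", "product", "date"},
    "customer_service": {"customer", "date"},
}


def shared_entities(matrix):
    """Return {entity: sorted processes} for entities used by two or more processes."""
    usage = defaultdict(set)
    for process, entities in matrix.items():
        for entity in entities:
            usage[entity].add(process)
    return {
        entity: sorted(processes)
        for entity, processes in usage.items()
        if len(processes) > 1
    }


if __name__ == "__main__":
    for entity, processes in sorted(shared_entities(BUS_MATRIX).items()):
        print(f"{entity}: {', '.join(processes)}")
```

Entities that appear under several processes are candidate integration points; an entity used by a single process (here, `supplier`) may need less cross-process coordination.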

Standardization: Data is generated as processes execute. Standardization ensures that this data is consistent (e.g., in format) regardless of where it is produced (the system), who or what triggers it (the trigger), which process produces it (the process) or how it is generated (the data generation process) within the enterprise. Determine which elements in each process need standardization and to what extent. Higher levels of standardization can lead to higher costs and lower flexibility, so striking a balance is key.
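
A minimal sketch of what format standardization can look like in practice, assuming two hypothetical source systems (`crm` and `billing`) that emit the same fields with different date formats and identifier conventions. The field names and formats are assumptions made for this example, not part of the framework.

```python
from datetime import datetime

# Hypothetical date formats used by two source systems.
SOURCE_DATE_FORMATS = {
    "crm": "%d/%m/%Y",
    "billing": "%Y%m%d",
}


def standardize(record, source):
    """Map a raw record from a source system onto one standard shape."""
    parsed = datetime.strptime(record["date"], SOURCE_DATE_FORMATS[source])
    return {
        # Identifiers are trimmed and upper-cased so they compare equal across systems.
        "customer_id": str(record["customer_id"]).strip().upper(),
        # Dates are emitted in ISO 8601 form (YYYY-MM-DD).
        "date": parsed.date().isoformat(),
        "source": source,
    }


if __name__ == "__main__":
    print(standardize({"customer_id": " ab12 ", "date": "31/03/2024"}, "crm"))
    print(standardize({"customer_id": "AB12", "date": "20240331"}, "billing"))
```

Each additional field or source brought under a rule like this adds maintenance effort, which is the cost-versus-flexibility trade-off noted above.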

Creating a reference data & analytics operating model

The reference operating model (see Figure 5) is customizable, but will remain largely intact at this level. As the nine components are detailed, the model will change substantially. It is common to see three to four iterations before the model is elaborate enough for execution.

For anyone looking to design a data and analytics operating model, Figure 5 is an excellent starting point as it has all the key components and areas.

Final step: Operating model architecture

Diverse stakeholders often require different views of the operating model for different reasons. As there is no one “correct” view of the operating model, organizations may need to create variants to fulfill everyone’s needs. A good example is comparing what a CEO will look for (e.g., strategic insights) versus what a CIO or COO would look for (e.g., an operating model architecture). To accommodate these variations, modeling tools like ArchiMate⁵ help create those different views quickly. Since the architecture can include many objects and relations over time, such tools also help greatly in maintaining the operating model. The objective is to blend process and technology to achieve the end objective. This means using documentation of operational processes aligned to industry best practices like Six Sigma, ITIL, CMM, etc. for functional areas. At this stage it is also necessary to define the optimal staffing model with the right skill sets. In addition, we take a closer look at what the organization has and what it needs, always keeping value and efficiency as the primary goal. Striking the right balance is key, as it can become expensive to attain even a small return on investment. Each of the core components in Figure 5 needs to be detailed at this point, in the form of a checklist, template, process, RACIF, performance metrics, etc., as applicable (Figure 6 shows three subcomponents detailed one level down). Subsequent levels involve detailing each block in Figure 6 until task/activity-level granularity is reached.

The operating model components

The nine components shown in Figure 5 will be present in one form or another, regardless of the industry or the organization of business units. Like any other operating model, the data and analytics model also involves people, process and technology, but from a data lens.

Component 1: Manage process: If an enterprise-level business operating model exists, this component would act as the connector/bridge between the data world and the business world. Every business unit has a set of core processes that generate data through various channels. Operational efficiency and the enablement of capabilities depend on the end-to-end management and control of these processes. For example, the quality of data and reporting capability depends on the extent of coupling between the processes.

Component 2: Manage demand/requirements & manage channel: Business units are normally thirsty for insights and require different types of data from time to time. Effectively managing these demands through a formal prioritization process is mandatory to avoid duplication of effort, enable faster turnaround and direct dollars to the right initiative.
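
One way to picture a formal prioritization process is the sketch below, which merges requests for the same topic from different business units and ranks what remains by a simple value-to-effort ratio. The `Request` fields, the 1-to-5 scales and the scoring rule are all assumptions made for illustration; an organization would substitute its own criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    business_unit: str
    topic: str   # the insight or data set being requested
    value: int   # estimated business value, 1 (low) to 5 (high)
    effort: int  # estimated effort, 1 (low) to 5 (high)


def prioritize(requests):
    """Merge requests on the same topic, then rank by value-to-effort ratio."""
    merged = {}
    for req in requests:
        key = req.topic.strip().lower()
        if key not in merged:
            merged[key] = {
                "topic": key,
                "units": [req.business_unit],
                "value": req.value,
                "effort": req.effort,
            }
        else:
            # Duplicate demand: record the extra requester, keep the highest value.
            merged[key]["units"].append(req.business_unit)
            merged[key]["value"] = max(merged[key]["value"], req.value)
    return sorted(
        merged.values(),
        key=lambda item: item["value"] / item["effort"],
        reverse=True,
    )


if __name__ == "__main__":
    backlog = [
        Request("sales", "Churn report", 4, 2),
        Request("marketing", "churn report ", 5, 2),
        Request("finance", "Margin dashboard", 3, 3),
    ]
    for item in prioritize(backlog):
        print(item["topic"], item["units"], item["value"] / item["effort"])
```

Merging before ranking is what avoids duplicated effort: the two churn requests become one work item serving both business units.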

Component 3: Manage data: This component manages and controls the data generated by the processes from cradle to grave. In other words, the processes, procedures, controls and standards around data, required to source, store, synthesize, integrate, secure, model and report it. The complexity of this component depends on the existing technology landscape and the three v’s of data: volume, velocity and variety. For a fairly centralized or single stack setup with a limited number of complementary tools and technology proliferation, this is straightforward. For many organizations, the people and process elements can become costly and time-consuming to build.

To enable certain advanced capabilities, the architect’s design and detail are major parts of this component. Each of the five subcomponents requires a good deal of due diligence in subsequent levels, especially to enable “as-a-service” and “self-service” capabilities.

Component 4a: Data management services: Data management is a broad area, and each subcomponent is unique. Given exponential data growth and use cases around data, the ability to independently trigger and manage each of the subcomponents is vital. Hence, enabling each subcomponent as a service adds value. While detailing the subcomponents, architects get involved to ensure the process can handle all types of data and scenarios. Each of the subcomponents will have its set of policy, process, controls, frameworks, service catalog and technology components.

Enablement of some of the capabilities as a service and the extent to which it can operate depends on the design of Component 3. It is common to see a few IOMs in place before the subcomponents mature.

Component 4b: Data analytics services: Deriving trustworthy insights from data captured across the organization is not easy. Every organization and business unit has its own requirements and priorities. Hence, there is no one-size-fits-all method. In addition, with advanced analytics such as those built around machine-learning (ML) algorithms, natural language processing (NLP) and other forms of artificial intelligence (AI), a standard model is not possible. Prior to detailing this component, it is mandatory to understand clearly what the business wants and how your team intends to deliver it. Broadly, the technology stack and data foundation determine the delivery method and the extent of as-a-service capabilities.

Similar to Component 4a, IOMs help achieve the end goal in a controlled manner. The interaction model will focus more on how the analytics team will work with the business to find, analyze and capture use cases/requirements from the industry and business units. The decision on the setup — centralized vs. federated — will influence the design of subcomponents.

Component 5: Manage project lifecycle: The project lifecycle component accommodates projects of Waterfall, Agile and/or hybrid nature. Figure 5 depicts a standard project lifecycle process. However, this is customizable or replaceable with your organization’s existing model. In all scenarios, the components require detailing from a data standpoint. Organizations that have an existing program management office (PMO) can leverage what they already have (e.g., prioritization, checklist, etc.) and supplement the remaining requirements.

The interaction model design will help support servicing of as-a-service and on-demand data requests from the data and analytics side during the regular program/project lifecycle.

Component 6: Manage technology/platform: This component, which addresses the technology elements, includes IT services such as shared services, security, privacy and risk, architecture, infrastructure, data center and applications (web, mobile, on-premises).

As in the previous component, it is crucial to detail the interaction model with respect to how IT should operate in order to support the as-a-service and/or self-service models. For example, this should include the cadence for communication between various teams within IT, handling of live projects, issue handling, etc.

Component 7: Manage support: No matter how well the operating model is designed, the human dimension plays a crucial role, too. Be it business, IT or corporate function, individuals’ buy-in and involvement can make or break the operating model.

The typical support groups involved in the operating-model effort include BA team (business technology), PMO, architecture board/group, change management/advisory training and release management teams, the infrastructure support group, IT applications team and corporate support group (HR, finance, etc.). Organization change management (OCM) is a critical but often overlooked component. Without it, the entire transformation exercise can fail.

Component 8: Manage change: This component complements the support component by providing the processes, controls and procedures required to manage and sustain the setup from a data perspective. This component manages both data change management and OCM. Tight integration between this and all the other components is key. Failure to define these interaction models will result in limited scalability, flexibility and robustness to accommodate change.

The detailing of this component will determine the ease of transitioning from an existing operating model to a new operating model (transformation) or of bringing additions to the existing operating model (enhancement).

Component 9: Manage governance: Governance ties all the components together, and thus is responsible for achieving the synergies needed for operational excellence. Think of it as the carriage driver that steers the horses. Although each component is capable of functioning without governance, over time they can become unmanageable and fail. Hence, planning and building governance into the DNA of the operating model adds value.

The typical governance areas to be detailed include data/information governance framework, charter, policy, process, controls, standards and the architecture to support enterprise data governance.

Intermediate operating models (IOMs)

As mentioned above, an organization can create as many IOMs as it needs to achieve its end objectives. Though there is no one right answer to the question of optimal number of IOMs, it is better to have no more than two IOMs in a span of one year, to give sufficient time for model stabilization and adoption. The key factors that influence IOMs are budget, regulatory pressure, industrial and technology disruptions, and the organization’s risk appetite. The biggest benefit of IOMs lies in their phased approach, which helps balance short-term priorities, manage risks associated with large transformations and satisfy the expectation of top management to see tangible benefits at regular intervals for every dollar spent.
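
The guideline of no more than two IOMs in a span of one year can be checked against a planned roadmap with a small rolling-window test. The sketch below is only an illustration: the function name, the 365-day window and the sample dates are assumptions, not part of the framework.

```python
from datetime import date, timedelta

MAX_IOMS_PER_YEAR = 2  # guideline stated in the text


def violates_cadence(launch_dates, window_days=365, limit=MAX_IOMS_PER_YEAR):
    """Return True if more than `limit` IOM launches fall within any rolling window."""
    dates = sorted(launch_dates)
    for i, start in enumerate(dates):
        window_end = start + timedelta(days=window_days)
        launches_in_window = sum(1 for d in dates[i:] if d < window_end)
        if launches_in_window > limit:
            return True
    return False


if __name__ == "__main__":
    crowded = [date(2024, 1, 1), date(2024, 6, 1), date(2024, 11, 1)]
    spaced = [date(2024, 1, 1), date(2024, 9, 1), date(2025, 3, 1)]
    print(violates_cadence(crowded))
    print(violates_cadence(spaced))
```

A rolling window is used rather than calendar years so that launches late in one year and early in the next still count against the same stabilization period.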

To succeed with IOMs, organizations need a tested approach that includes the following critical success factors:

- Clear vision around data and analytics.

- Understanding of the problems faced by customers, vendors/suppliers and employees.

- Careful attention paid to influencers.

- Trusted facts and numbers for insights and interpretation.

- Understanding that the organization cannot cover all aspects (in breadth) on the first attempt.

- Avoidance of emotional attachment to the process, or of being too detail-oriented.

- Avoidance of trying to design an operating model optimized for everything.

- Avoidance of passive governance — as achieving active governance is the goal.

Moving forward

Two factors deserve highlighting: First, as organizations establish new business ventures and models to support their go-to-market strategies, their operating models may also require changes. However, a well-designed operating model will be adaptive enough to new developments that it should not change frequently. Second, the data-to-insight lifecycle is a very complex and sophisticated process given the constantly changing ways of collecting and processing data. Furthermore, at a time when complex data ecosystems are rapidly evolving and organizations are hungry to use all available data for competitive advantage, enabling things such as data monetization and insight-driven decision-making becomes a daunting task. This is where a robust data and analytics operating model shines. According to a McKinsey Global Institute report, “The biggest barriers companies face in extracting value from data and analytics are organizational.”⁶ Hence, organizations must prioritize and focus on people and processes as much as on technological aspects. Just spending heavily on the latest technologies to build data and analytics capabilities will not help, as it will lead to chaos, inefficiencies and poor adoption. Though there is no one-size-fits-all approach, the material above provides key principles that, when adopted, can provide optimal outcomes for increased agility, better operational efficiency and smoother transitions.

Endnotes

1 A tool that allows one to describe, design, challenge and pivot the business model in a straightforward, structured way. Created by Alexander Osterwalder, of Strategyzer.

2 The Operating Model Canvas helps capture thoughts about how to design operations and organizations that will deliver a value proposition to a target customer or beneficiary. It helps translate strategy into choices about operations and organizations. Created by Andrew Campbell, Mikel Gutierrez and Mark Lancelott.

3 First described by Michael E. Porter in his 1985 best-seller, Competitive Advantage: Creating and Sustaining Superior Performance. This is a general-purpose value chain to help organizations understand their own sources of value — i.e., the set of activities that helps an organization to generate value for its customers.

4 The 7S framework is based on the theory that for an organization to perform well, the seven elements (structure, strategy, systems, skills, style, staff and shared values) need to be aligned and mutually reinforcing. The model helps identify what needs to be realigned to improve performance and/or to maintain alignment.

5 ArchiMate is a technical standard from The Open Group and is based on the concepts of the IEEE 1471 standard. This is an open and independent enterprise architecture modeling language. For more information: www.opengroup.org/subjectareas/enterprise/archimate-overview.

6 The age of analytics: Competing in a data-driven world. Retrieved from www.mckinsey.com/~/media/McKinsey/Business%20Functions/McKinsey%20Analytics/Our%20Insights/The%20age%20of%20analytics%20Competing%20in%20a%20data%20driven%20world/MGI-The-Age-of-Analytics-Full-report.ashx

References

https://strategyzer.com/canvas/business-model-canvas

https://operatingmodelcanvas.com/

Enduring Ideas: The 7-S Framework, McKinsey Quarterly, www.mckinsey.com/business-functions/strategy-and-corporate-finance/our-insights/enduring-ideas-the-7-s-framework.

www.opengroup.org/subjectareas/enterprise/archimate-overview
